The era of humans working collaboratively alongside self-driving robots has arrived, and a future of advanced intelligent automation is not only imaginable but compelling. Today, leading global brands in manufacturing, warehousing, and logistics rely on material handling automation solutions to achieve operational goals and maintain their competitive edge, with autonomous mobile robots (AMRs) delivering flexibility, reliability, safer working environments, and higher productivity.

As the use of industrial robots rises, material handling automation technology continues to advance rapidly, with new innovations bringing higher levels of sophistication, autonomy, and agility.

In this blog, we help you understand the basics of AMR artificial intelligence (AI) technology, explaining what you need to know about the different systems that drive today’s AMRs to ensure you select the right solution for your operation.

Are you making a smart choice for mobile automation? What are the differences in AMR intelligence? Is one technology better than the other? Find out how computer vision and LiDAR make your AMR fleet work smarter and safer with our free eBook.


How Do Self-Driving Robots Operate in a Facility?

Two categories of self-driving industrial vehicles, autonomous mobile robots (AMRs) and automated guided vehicles (AGVs), serve the similar purpose of moving materials within manufacturing, warehousing, and logistics facilities. However, they differ distinctly in how they operate.

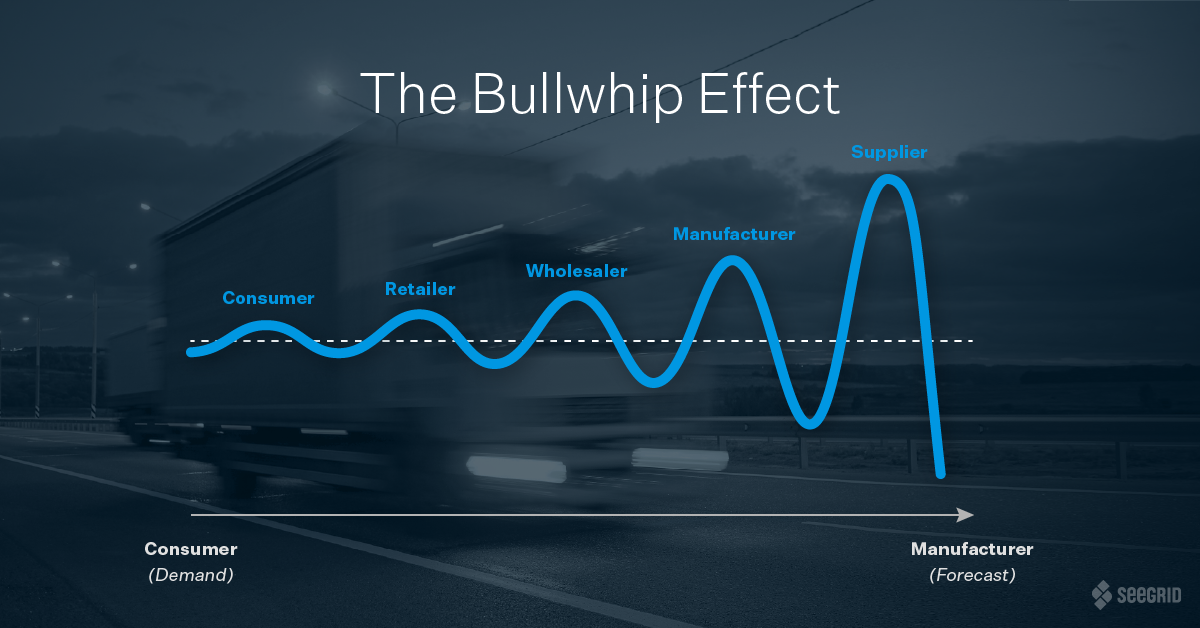

Traditional AGVs follow fixed paths defined by infrastructure built into the facility, such as tape, wire, magnets, or reflectors. Installing these physical landmarks can be a costly investment of time and capital. Companies not only pay to reconfigure the facility and install equipment but also absorb the cost of production downtime while the updates are made. Once the AGVs are running, any change to a route requires the AGV vendor to remove and rebuild the landmarks, risking additional downtime. This rigidity makes it hard for a facility to adopt a continuous improvement mindset, and the lack of agility can hand competitors the advantage.

The constantly growing need for supply chain agility and flexibility has driven rapid advancements in material handling automation. Now, many leading companies are turning to AMR solutions, which can navigate without any physical landmarks, relying solely on technology on board the robot.

AMRs can sense their surroundings in real time and navigate based on the data gathered by their on-board technology. However, not all AMRs are created equal, and they do not all navigate or operate in the same way. They differ significantly in the sensors and technologies they use to gather and interpret data, including LiDAR and computer vision.

What is LiDAR Navigation for AMRs?

LiDAR stands for “light detection and ranging.” This method uses a laser-based sensor to give AMRs a sense of spatial awareness. The sensor scans the surrounding environment by sending out pulses of laser light and timing how long each pulse takes to reflect off an object and return. Because light travels at a known, constant speed, that round-trip time yields a precise measurement of the distance to the detected object.
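The time-of-flight calculation described above reduces to one line of arithmetic: the pulse covers the distance twice, so the distance is d = c·t/2. The Python sketch below assumes a simple pulsed LiDAR and uses the vacuum speed of light; the function name `tof_distance_m` is hypothetical, and real sensors add calibration and signal-processing steps that are not shown here.

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a target from a laser pulse's round-trip time.

    Hypothetical helper for illustration. The pulse travels to the target
    and back, so the one-way distance is half of (speed of light x time).
    The small slowdown of light in air is ignored in this sketch.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A return after 66.7 nanoseconds corresponds to a target roughly 10 m away.
print(f"{tof_distance_m(66.7e-9):.2f} m")
```

The same arithmetic shows how fine the timing must be: a 1 cm change in distance changes the round-trip time by only about 67 picoseconds.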

However, LiDAR has weaknesses: it captures far fewer data points than a camera does, so the information it provides is much sparser. Even with several LiDAR sensors stacked together, LiDAR resolution does not come close to that of cameras.

LiDAR is extremely precise, but its precise view offers limited visibility at low resolution. As a result, AMRs that rely solely on LiDAR often fail in highly dynamic environments such as manufacturing, warehousing, and logistics facilities.
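One way to see why LiDAR data is sparse is to compute how far apart neighboring samples land at a given range: the gap is roughly the range multiplied by the angle between samples, in radians. The sketch compares a hypothetical LiDAR with an assumed 0.25° step between beams against a hypothetical camera whose 90° field of view spans 1,280 pixels. These figures are illustrative assumptions, not specifications of any Seegrid sensor, and `point_spacing_m` is a hypothetical helper.

```python
import math


def point_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Approximate gap between adjacent samples at a given range.

    Uses the arc-length approximation spacing ~= range x angle (radians),
    which is accurate for the small angles between neighboring samples.
    """
    return range_m * math.radians(angular_resolution_deg)


# Hypothetical sensor parameters, chosen only for illustration.
LIDAR_STEP_DEG = 0.25   # assumed horizontal step between laser beams
CAMERA_HFOV_DEG = 90.0  # assumed camera horizontal field of view
CAMERA_WIDTH_PX = 1280  # assumed camera image width in pixels

# Average angle covered by one pixel (ignores lens distortion).
camera_step_deg = CAMERA_HFOV_DEG / CAMERA_WIDTH_PX

for r in (1.0, 5.0, 10.0):
    lidar_gap_cm = 100 * point_spacing_m(r, LIDAR_STEP_DEG)
    camera_gap_cm = 100 * point_spacing_m(r, camera_step_deg)
    print(f"{r:>4.0f} m: LiDAR ~{lidar_gap_cm:.1f} cm, camera pixel ~{camera_gap_cm:.2f} cm")
```

With these assumed numbers, neighboring LiDAR points are several centimeters apart at 10 m, while neighboring camera pixels are closer together, though a pixel records appearance rather than distance directly. A single-plane (2D) LiDAR also samples only one horizontal slice of the scene, whereas every row of a camera image adds another line of samples.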

What is Computer Vision Navigation for AMRs?

Computer vision is a form of artificial intelligence (AI) in which software enables a computer, or in this case a mobile robot, to “see,” analyze, and comprehend the content of its visual surroundings. Cameras are used to gather environmental data because of their wide field of view, high resolution, and ability to capture color.

Configuring cameras in pairs gives the AMR depth perception, letting it see a three-dimensional view of the world much as humans do with two eyes. With multiple camera pairs mounted on board, Seegrid AMRs have stereoscopic vision across a more expansive field of view: together, the pairs capture a 360° range of stereoscopic vision in front of, behind, above, and beside the robot.
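For a calibrated, rectified stereo pair, depth follows from how far a point shifts between the left and right images (its disparity): Z = f·B/d, where f is the focal length in pixels, B is the baseline (the distance between the two cameras), and d is the disparity in pixels. The sketch uses a hypothetical 700 px focal length and 12 cm baseline, and the helper `stereo_depth_m` is hypothetical; it illustrates the standard stereo geometry, not Seegrid's proprietary vision system.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo camera pair.

    For rectified cameras, a point at depth Z appears shifted horizontally
    between the left and right images by the disparity d = f * B / Z,
    so Z = f * B / d. Larger disparity means a closer point.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera pair: 700 px focal length, 12 cm between the lenses.
FOCAL_PX = 700.0
BASELINE_M = 0.12

for d in (84.0, 42.0, 8.4):
    print(f"disparity {d:>5.1f} px -> depth {stereo_depth_m(FOCAL_PX, BASELINE_M, d):.2f} m")
```

With these assumed values, a disparity of 84 px places a point about 1 m away and 8.4 px about 10 m away. Because disparity shrinks as distance grows, a one-pixel matching error costs far more depth accuracy for distant objects than for nearby ones.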

Seegrid AMRs are individually equipped with our proprietary computer vision system, which is a breakthrough robotics technology pioneered by world-renowned roboticist Dr. Hans Moravec, Seegrid’s founder and chief scientist. Seegrid’s AI-based algorithm collects and prioritizes massive amounts of real-world, live data points, enabling Seegrid AMRs to reliably navigate in busy, ever-changing industrial environments.

Sensor Fusion: Combining Cameras and LiDAR

Rather than relying solely on cameras or on LiDAR, Seegrid AMRs take a hybrid, sensor fusion approach that draws on the best of what each sensor type has to offer. Seegrid combines these strengths to create the safest, most reliable AMRs on the market.

To enhance the robot’s ability to perceive and understand its world, Seegrid’s algorithm leverages our advanced perception software, which fuses data from a combination of LiDAR sensors to improve the robot’s situational awareness. These precise data points are combined with vision-based data, giving the robots even more information for an extremely accurate understanding of their immediate surroundings. With a richer understanding of their environment, the robots can interpret and make sound decisions in a wider range of situations.
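Seegrid's perception software is proprietary, so the following is only a generic illustration of sensor fusion: inverse-variance weighting, the textbook way to combine two independent, noisy measurements of the same quantity (equivalent to a one-dimensional Kalman filter update). The `RangeEstimate` type, the `fuse` function, and all readings and uncertainties are hypothetical; a precise LiDAR range is fused with a less certain camera-based estimate of the same obstacle.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    distance_m: float   # measured distance to an obstacle
    variance_m2: float  # measurement uncertainty (variance, in m^2)


def fuse(a: RangeEstimate, b: RangeEstimate) -> RangeEstimate:
    """Combine two independent estimates of the same distance.

    Each estimate is weighted by the inverse of its variance, so the more
    certain sensor counts for more. The fused variance is always smaller
    than either input variance.
    """
    if a.variance_m2 <= 0 or b.variance_m2 <= 0:
        raise ValueError("variances must be positive")
    wa = 1.0 / a.variance_m2
    wb = 1.0 / b.variance_m2
    fused_distance = (wa * a.distance_m + wb * b.distance_m) / (wa + wb)
    return RangeEstimate(fused_distance, 1.0 / (wa + wb))


# Hypothetical readings of the same obstacle.
lidar = RangeEstimate(distance_m=4.02, variance_m2=0.0004)   # ~2 cm std. dev.
camera = RangeEstimate(distance_m=3.90, variance_m2=0.0100)  # ~10 cm std. dev.
fused = fuse(lidar, camera)
print(fused)
```

The fused distance lands close to the more certain LiDAR reading (about 4.015 m), and the fused variance is smaller than either input's, which is the formal sense in which combining data sources yields a more reliable picture.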

Why It Matters

How well do humans make decisions with a highly limited set of data points? Not very well, and robots lack the gut instinct and intuition that humans can fall back on. Simply put, the more information an AMR has, the more intelligent it becomes. Seegrid AMRs are designed to gather as much information as possible, giving their software the data it needs to determine what is important in the surrounding environment. With more information and a robust set of instructions, the robot’s decision-making is far more reliable.

Seegrid AMRs see and understand more: they collect a higher density of information, then prioritize and filter that data to perform and report on tasks safely and efficiently. Because the data is captured continuously in real time, Seegrid AMRs know what is constant in their environment and what changes. Our AI-based algorithms fuse all of this data to deliver an unmatched fleet that drives operational excellence, continuous improvement, and a competitive edge.
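As a simplified illustration of separating what stays constant from what changes, the sketch counts how often each cell of a hypothetical occupancy grid is observed as occupied across repeated scans. Cells occupied in nearly every scan are treated as fixed structure, and the rest as moving or changing objects. The grid representation, the `classify_cells` function, and the 0.9 threshold are all assumptions for illustration; this is not Seegrid's algorithm.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

Cell = Tuple[int, int]  # (row, col) index in a hypothetical occupancy grid


def classify_cells(
    scans: Iterable[Set[Cell]], static_ratio: float = 0.9
) -> Tuple[Set[Cell], Set[Cell]]:
    """Split observed cells into 'static' and 'changing' by persistence.

    A cell seen as occupied in at least `static_ratio` of the scans is
    treated as part of the fixed environment (walls, racks); any other
    occupied cell is treated as something that moves or changes.
    """
    scans = list(scans)
    if not scans:
        return set(), set()
    # Each scan is a set, so a cell is counted at most once per scan.
    counts = Counter(cell for scan in scans for cell in scan)
    threshold = static_ratio * len(scans)
    static = {cell for cell, n in counts.items() if n >= threshold}
    changing = set(counts) - static
    return static, changing


# Hypothetical example: a fixed wall plus an object passing by over four scans.
wall = {(0, 0), (0, 1), (0, 2)}
scans = [wall | {(3, 1)}, wall | {(3, 2)}, wall | {(3, 3)}, wall]
static, changing = classify_cells(scans)
print(sorted(static))
print(sorted(changing))
```

Here the three wall cells are occupied in every scan and come out as static, while the cells the passing object occupied once each are flagged as changing.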

To achieve your operational goals, you need a solution that is flexible, safe, and reliable. Any disruption to your workflows can cause costly downtime and major losses in productivity, profits, and competitive advantage. Make sure your automation investment is equipped with sophisticated AI technologies that enable efficient, repeatable material movement with fewer safety incidents and less downtime, today and in the future.